A company we deal with had a pretty nasty problem recently. They were making some major VMware changes, including networking, and were using Storage vMotion to move VMs from one datastore to another on the same aggregate.

Sounds good, right? Well, NetApp’s Data ONTAP has a well-intentioned, historic feature: aggregate snapshots. The problem this company faced came from those aggregate-level snapshots. While they are set to auto-delete, the blocks are not freed immediately, even though the space looks available. A low-priority free-space reaper process is what actually makes the blocks writable again. With the blocks unavailable, the aggregate was essentially full, which led to the usual result of WAFL space exhaustion: glacial latency. That latency slows the space-freeing process too, making things even worse. In this case, it ended with a whole company going home. Background freeing of blocks is one of those features that makes sense, but as aggregates have grown bigger and bigger, past 16TB, whole-volume scan operations have a greater impact.
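
To make the gap between "looks free" and "is writable" concrete, here is a toy Python model. It is only a sketch of the idea described above, not how ONTAP or WAFL is implemented: the class name `ToyAggregate`, its fields, and the block counts are all made up for illustration.

```python
from dataclasses import dataclass


@dataclass
class ToyAggregate:
    """Hypothetical model: a block is in use, pending free, or writable."""
    total: int
    used: int = 0
    pending_free: int = 0  # released by snapshot auto-delete, not yet reclaimed

    @property
    def reported_free(self) -> int:
        # What a space report would suggest: pending blocks already look free.
        return self.total - self.used

    @property
    def writable(self) -> int:
        # What can actually accept new writes right now.
        return self.total - self.used - self.pending_free

    def delete_snapshot(self, blocks: int) -> None:
        # Auto-delete releases the blocks, but only into the pending pool.
        blocks = min(blocks, self.used)
        self.used -= blocks
        self.pending_free += blocks

    def reap(self, budget: int) -> int:
        # The low-priority reaper reclaims at most `budget` blocks per pass.
        freed = min(budget, self.pending_free)
        self.pending_free -= freed
        return freed

    def write(self, blocks: int) -> bool:
        # A write only succeeds if enough blocks are truly writable.
        if blocks > self.writable:
            return False
        self.used += blocks
        return True


aggr = ToyAggregate(total=1000, used=950)
aggr.delete_snapshot(300)
print("reported free:", aggr.reported_free, "writable:", aggr.writable)
print("write of 200 blocks succeeds:", aggr.write(200))
aggr.reap(100)
aggr.reap(100)
print("after two reaper passes:", aggr.write(200))
```

In this sketch the write fails right after the snapshot is deleted, even though the reported free space is larger than the write, and only succeeds once the reaper has had a couple of passes. If the reaper's budget shrinks under load, as the latency spiral above suggests, the gap stays open longer.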

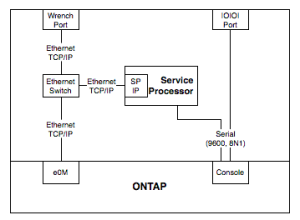

Normally you make snapshots of volumes, for connected system backups, recovery, etc. Aggregate-level ones were a failsafe of last resort for ONTAP. From really early days, NetApp systems have had a battery to keep the NVMEM cache alive, even if the system loses power. When power comes back, the cached changes are flushed to disk, and WAFL is once again consistent. The batteries used in the time I’ve dealt with NetApp have allowed for a 72-hour power outage. At times, however, this is not enough. ONTAP will boot, find that WAFL is inconsistent, and run what is essentially an fsck (wafliron). Usually that works. Sometimes it doesn’t, and then you have a Very Big Problem. Aggregate-level snapshots can save you here, the same way a LUN snapshot might save an attached system.

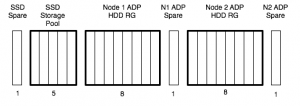

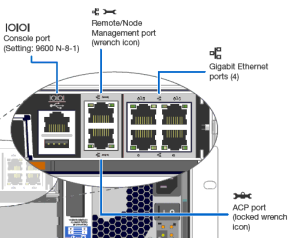

This all changed with the FAS8000 series. These systems have a battery too, but they use it to de-stage the NVMEM to an SSD. This means they can withstand outages longer than 72 hours, so there is no need for aggregate snapshots on the FAS8020, FAS8040, FAS8060 and FAS8080 EX systems. I’d go so far as to turn them off on all systems. After the problems this company faced, I’ll be doing it for everyone.

There is one downside: aggregate snapshots can save you if you delete the wrong volume. You move any other volumes off the aggregate (yay cDOT), assuming you have another aggregate to move them to, then revert the aggregate. This risk is usually addressed with SnapVault, SnapMirror or backup software, but it’s worth remembering. Aggregate snapshots are also used by SyncMirror and MetroCluster, as outlined in this article, but in my market segment, these aren’t major uses.

This KB from NetApp recommends that they be turned off for data aggregates, which, like flow control on 10GbE ports, makes you wonder why this isn’t the default setting. So check your systems, folks, and turn off your aggregate snapshots, especially if you have FAS8000 systems.