ONTAP 8.3 is out right now as a release candidate, which means you can install it, but systems aren’t shipping with it yet. If you’re installing one of these entry-level systems new, consider carefully whether it’s the right choice. From my point of view it is, as you get much better storage efficiency. You should plan to upgrade from 8.3RC1 to 8.3 in Feb-Mar 2015, but with Clustered ONTAP and the right client and share settings, that upgrade can be totally non-disruptive (even to CIFS). It’s worth noting too that, for now at least, if you need to replace an ADP-partitioned drive, you will probably need to call support for assistance (but it’s a free call, and they love to learn new stuff).

If you want to convert straight out of the box, it’s pretty easy:

- Download the installable version of ONTAP 8.3 from support.netapp.com, and put it in an HTTP-accessible location

- Press Control-C at startup to get to the boot menu. Choose option 7 (install new software first), and enter the URL of the 8.3RC1 image on your webserver

- Once 8.3 is installed on the internal boot device, boot into maintenance mode from the boot menu and unassign all drives from each node – this isn’t covered in NetApp’s current documentation, but is required

- Reboot each node, and then choose option 4 – wipeconfig. Once the wipe is finished, the system will use ADP on the internal drives and be ready to set up
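For the first step, any web server the nodes can reach will do. If you don’t have one handy, here is a minimal sketch using only Python’s standard library to serve the directory holding the downloaded image. The function name `serve_directory`, the directory path and the port are my own placeholders, not anything NetApp specifies, and this is only meant for a temporary, trusted network.

```python
import functools
import http.server
import threading


def serve_directory(directory: str, port: int = 8000) -> http.server.ThreadingHTTPServer:
    """Serve the files in `directory` over plain HTTP on `port`.

    Runs in a background thread and returns the server object so the
    caller can stop it with server.shutdown() when the install is done.
    """
    # Bind the handler to the chosen directory instead of the current one.
    handler = functools.partial(
        http.server.SimpleHTTPRequestHandler, directory=directory
    )
    # Listen on all interfaces so the storage nodes can reach it.
    server = http.server.ThreadingHTTPServer(("0.0.0.0", port), handler)
    threading.Thread(target=server.serve_forever, daemon=True).start()
    return server


# Example (hypothetical path):
#   server = serve_directory("/path/to/downloads", 8000)
#   ... run the boot menu install against http://<this-host>:8000/<image file> ...
#   server.shutdown()
```

The URL you enter at the boot menu is then this machine’s address, the port, and the image file name exactly as you downloaded it.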

Setup of the FAS22xx and FAS25xx systems can be as easy or as complex as you’d like. They come with a USB key to run a GUI setup application, so you never need to touch the serial connection, but I’ve never used it, and the version on the key probably doesn’t support 8.3RC1, so I just do initial node and cluster setup from the CLI. Another great benefit of 8.3 is that OnCommand System Manager is now built in and served from the cluster management interface, so there’s no need to keep a machine around with the right versions of Java and Flash installed.

Theoretically, you might be able to do a conversion to ADP with data in place by relocating all of the data to one node, unowning and reformatting the other one, and then re-joining it to the cluster. I haven’t been in a position to try it, but if you have, I’d be interested to know how it went (email me@thisdomain). Some caveats on an ADP conversion: you need to have at least 4 disks per node for ADP, so the 3 current root aggr drives and one spare. Ideally, relocate your data to the surviving node using vol move, not aggregate relocation. Then, once one node is converted, vol move everything to a data aggregate on the converted node, and do the same thing to the unconverted one.

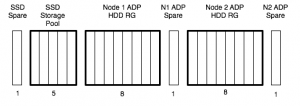

Disk slicing gives you the valid option of a true active/passive configuration, one which was never really possible even with 7-Mode. The root and the data partitions do not need to be assigned to the same node. You can assign all the data partitions to one node while leaving just enough root partitions on the passive node, or go for the traditional active/active layout of two data aggregates, one per node. There are some pretty big caveats around disk spares and the importance of quick replacement of failed drives, but on a FAS2520, it’s probably worth it.

I have started writing a post on active/active vs active/passive configs a couple of times, but put it off in favour of waiting till ADP was available. The basic thought is that you want a node to be able to take over for its HA partner without service degradation, so you want to keep each node below 50% utilization. At that point the total is the same as running all workloads on a single system, but maybe you’ll accept some degradation in favour of getting more use out of the system. You have lots of choices. One thing to consider is processor affinity – with more processor cores, there’s less need to schedule CPU time to volume operations, and running on more processors (i.e., both nodes) gives you access to more cores. But on a FAS2520, how many volumes are you likely to have?
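To make the 50% rule concrete, here is a rough sketch of the arithmetic. It assumes two identical nodes and that load simply adds up on the survivor after a takeover, which is a simplification; the function names and the `ceiling` parameter are mine, purely for illustration.

```python
def takeover_utilization(node_a: float, node_b: float) -> float:
    """Utilization the surviving node would see after a takeover.

    Inputs are fractions of one node's capacity (0.4 means 40%).
    Assumes identical nodes and that load adds linearly.
    """
    return node_a + node_b


def survives_without_degradation(node_a: float, node_b: float,
                                 ceiling: float = 1.0) -> bool:
    """True if one node could carry both workloads within `ceiling`."""
    return takeover_utilization(node_a, node_b) <= ceiling


# Active/active with each node under 50%: takeover fits on one node.
print(survives_without_degradation(0.45, 0.5))
# Active/active run hotter: more use of the hardware, degraded on takeover.
print(survives_without_degradation(0.6, 0.5))
# Active/passive: the same total load, all on one node to begin with.
print(survives_without_degradation(0.9, 0.0))
```

Lowering `ceiling` below 1.0 is one way to leave some headroom on the survivor; raising the per-node numbers past 50% is the "accept some degradation" trade-off.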